- #Databricks workspace how to

- #Databricks workspace install

- #Databricks workspace full

- #Databricks workspace code

#Databricks workspace code

Please do not use this code in a production environment.

#Databricks workspace full

The full source code, JSON request, and YAML files are available in my GitHub repository. I aim to continue expanding and updating this script to serve multiple use cases as they arise.

The Python code can also be adapted to work within an Azure Automation Account Python runbook. Python support for Azure Automation is now generally available, though only for Python 2. This code is based on Python 3, so hopefully support for Python 3 will become available in the near term.

Excerpts from the script:

`bug("Exception occured with create_job:", exc_info = True)`

`json_request = json.load(json_request_file)`

`def create_cluster_req(api_endpoint,headers_config,data):`
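As a rough illustration of how the JSON request file and a function shaped like `create_cluster_req(api_endpoint, headers_config, data)` might fit together, here is a minimal sketch using only the standard library. The helper names `load_json_request` and `build_cluster_request` are hypothetical, and it is an assumption that `api_endpoint` is the full URL of the cluster-creation endpoint and that `headers_config` is a dict of HTTP headers. The sketch only prepares the request and does not send it.

```python
import json
import urllib.request


def load_json_request(json_request_path):
    """Read a JSON request body from disk and return it as a dict."""
    with open(json_request_path) as json_request_file:
        return json.load(json_request_file)


def build_cluster_request(api_endpoint, headers_config, data):
    """Prepare (but do not send) a POST request carrying the cluster spec.

    Assumptions: api_endpoint is the full URL of the cluster-creation
    endpoint, and headers_config is a dict of HTTP headers (for example
    an Authorization header).
    """
    body = json.dumps(data).encode("utf-8")
    headers = {"Content-Type": "application/json", **headers_config}
    return urllib.request.Request(
        api_endpoint, data=body, headers=headers, method="POST"
    )
```

Passing the returned object to `urllib.request.urlopen` would send it. Keeping the build step separate from the send step makes the payload easy to inspect before anything is provisioned.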

#Databricks workspace how to

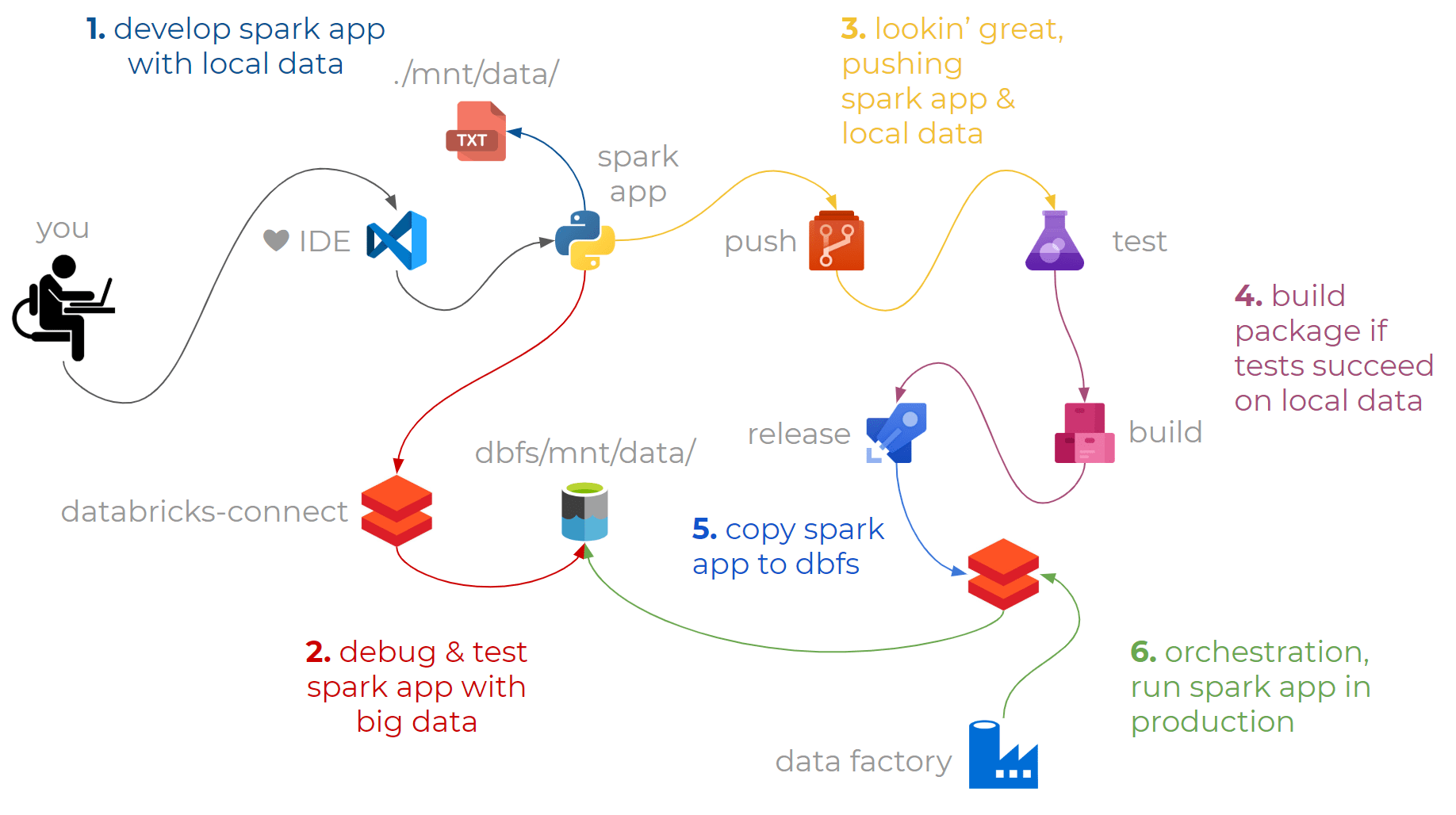

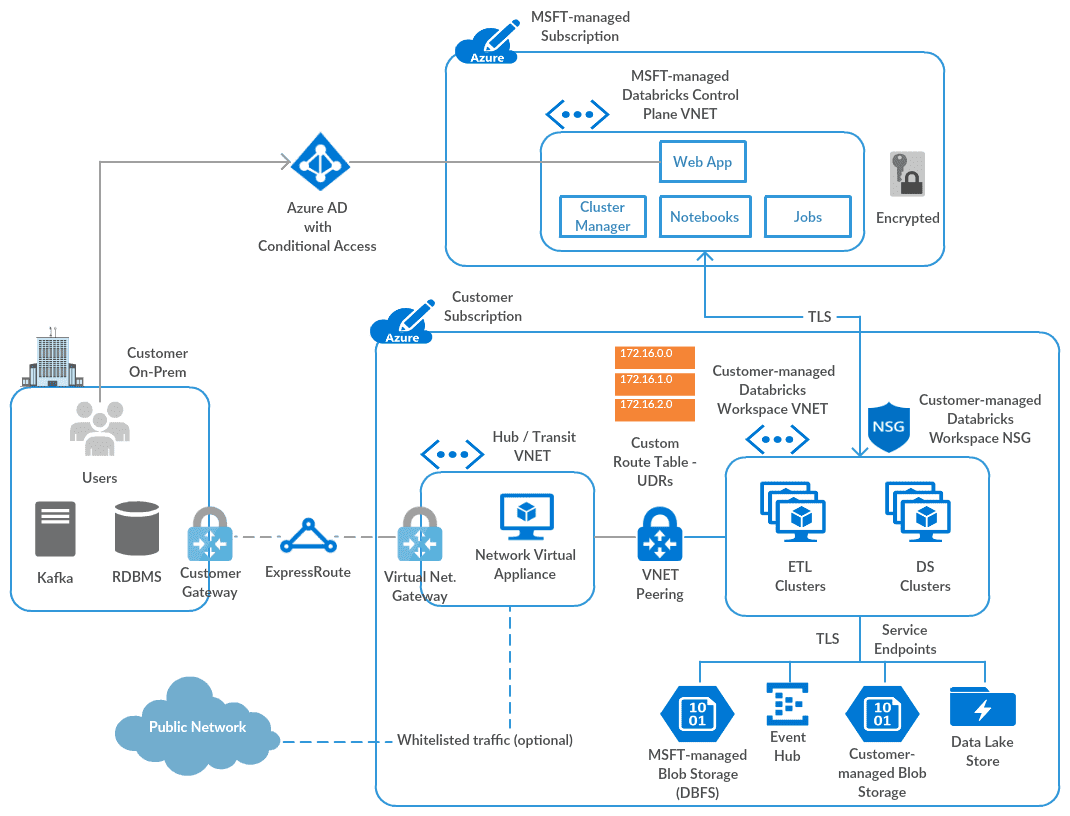

This article explains how to create a Databricks workspace using the AWS Quick Start (CloudFormation template).

Azure Databricks is a data analytics and machine learning platform based on Apache Spark. The first set of tasks to perform before using Azure Databricks for any kind of data exploration or machine learning is to create a Databricks workspace and cluster.

The following Python functions were developed to automate the provisioning and deployment of an Azure Databricks workspace and cluster. The functions use a number of Azure Python third-party and standard libraries to accomplish these tasks. The script has come in handy when there is a need to quickly provision a Databricks workspace environment in Azure for testing, research, and data exploration.

The script imports the following (module paths are elided in the text):

- `from … import ServicePrincipalCredentials`
- `from … import ResourceManagementClient`
- `from ….models import DeploymentMode`

The first function in the Python script, `read_yaml_vars_file(yaml_file)`, takes a YAML variable file path as its argument, reads the YAML file, and returns the variables and values required to authenticate against the designated Azure subscription. The file paths are defined as:

- `YAML_VARS_FILE = "Workspace-DB50\\databricks_workspace_vars.yaml"`
- `TEMPLATE_PATH = "Workspace-DB50\\databricks_premium_workspaceLab.json"`

The JSON request file is opened with `with open(json_request_path) as json_request_file:`.

The dwt tool can be installed to an Azure Cloud Shell. The dwt CLI is built using the databricks CLI SDK and uses its authentication mechanism to log in to a workspace. To log in to an Azure Databricks workspace using a user token:

`databricks configure --host MY_HOST -f token`
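Once the databricks CLI has been configured, the stored host and token can be read back from its configuration file. The sketch below assumes the legacy CLI's INI-style file at `~/.databrickscfg` with a `[DEFAULT]` section holding `host` and `token` keys. The function name `read_databricks_profile` is hypothetical and is not part of dwt or the databricks CLI.

```python
import configparser
from pathlib import Path


def read_databricks_profile(config_path=None, profile="DEFAULT"):
    """Return (host, token) from a databricks CLI config file.

    Assumption: the file is INI-formatted, e.g.
        [DEFAULT]
        host = https://...
        token = ...
    """
    path = Path(config_path) if config_path else Path.home() / ".databrickscfg"
    # Disable interpolation so a '%' inside a token is read literally.
    parser = configparser.ConfigParser(interpolation=None)
    if not parser.read(path):
        raise FileNotFoundError(f"No readable config file at {path}")
    if profile != "DEFAULT" and not parser.has_section(profile):
        raise KeyError(f"Profile {profile!r} not found in {path}")
    section = parser[profile]
    return section.get("host"), section.get("token")
```

A caller might use the returned pair to build an `Authorization: Bearer <token>` header for REST calls against the same workspace.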

#Databricks workspace install

In a Python 3.7 environment, install this repository.

Remove annotations for run cells from all notebooks in the workspace.

Dwt is a tool to clear run cells from notebooks, for example where there might be concern about data held in run cells, or as preparation for commit to source control. You can also use it to import/export multiple notebooks with this capability, in use cases where dbc export may not be possible due to volume limits.

Exports all notebooks from a workspace as base64 source code. The process will remove annotations for run cells.

- path: location to output zip of notebooks
- import_prefix: folder to import into (default: IMPORT)
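To make "base64 source code" concrete, here is a small sketch of decoding and re-encoding a notebook's source. It assumes an export payload shaped like `{"content": "<base64 string>"}`, which is how the Databricks Workspace API export endpoint returns notebook source. The function names are hypothetical and this is not dwt's own implementation.

```python
import base64
import json


def decode_exported_notebook(response_text):
    """Decode the base64 'content' field of an export payload into source text.

    Assumption: response_text is JSON of the form {"content": "<base64>"}.
    """
    payload = json.loads(response_text)
    return base64.b64decode(payload["content"]).decode("utf-8")


def encode_notebook_for_import(source_text):
    """Encode notebook source text as a base64 string suitable for an import payload."""
    return base64.b64encode(source_text.encode("utf-8")).decode("ascii")
```

Round-tripping through these two helpers leaves the source text unchanged, which is a quick sanity check before importing many notebooks at once.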
